Measurement Framework

What is the MANRS Measurement Framework?

To measure MANRS readiness for a particular network, a set of metrics has been proposed, one for each action. For example, to measure the degree to which Filtering (Action 1) is implemented, we measure the number of routing incidents in which the network was implicated, either as a culprit or as an accomplice, and their duration. This produces a number that indicates the degree of compliance: a MANRS readiness index (MR-index) for Action 1 over a specified period of time.

The measurements are passive, which means that they do not require cooperation from the measured network. That allows us to measure the MR-indices not only for the members of the MANRS initiative, but for all networks on the Internet (at the moment more than 60,000).

Calculation of Metrics and Data sources

Consolidation of Multiple Events

In the current model, only routing incidents related to the network in question and adjacent networks are taken into account.

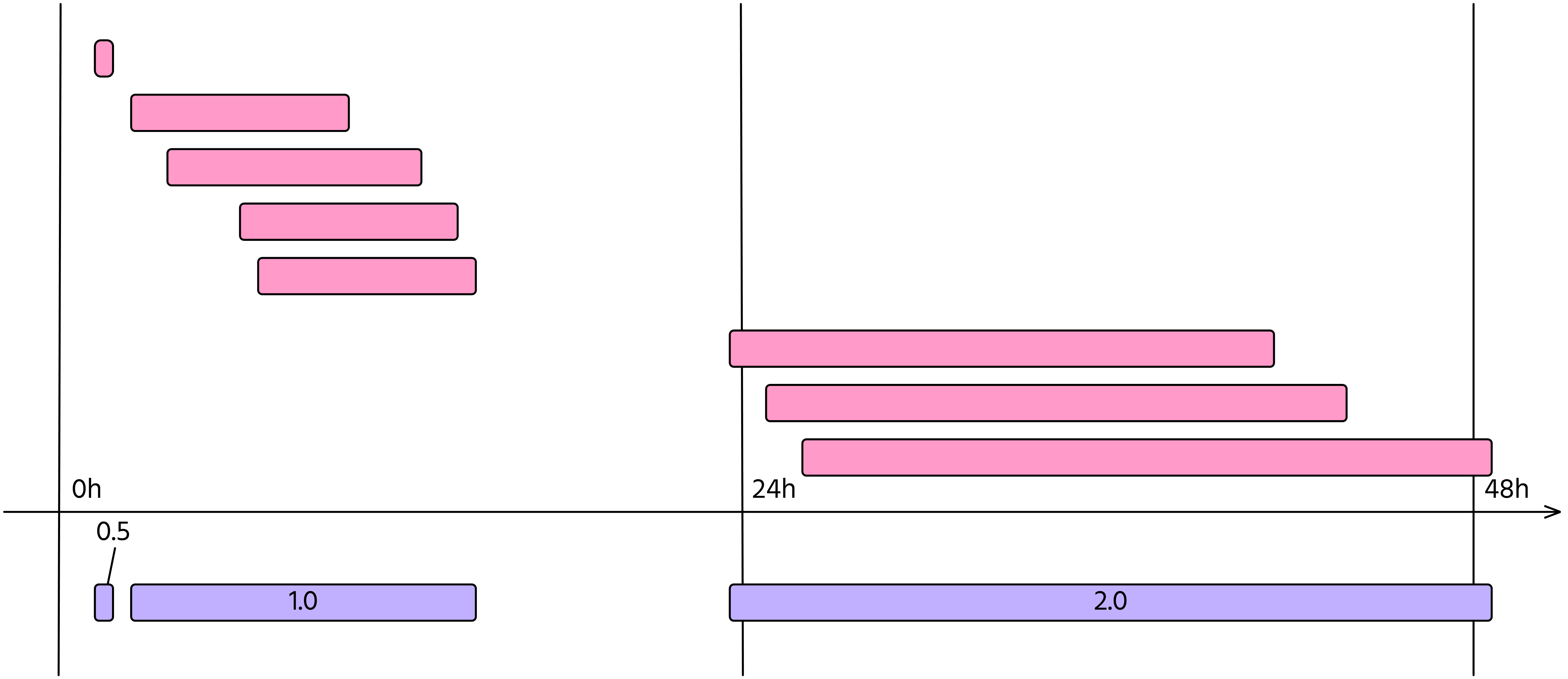

Inaction is penalized: the longer an incident lasts, the more heavily it is weighted. For example, the following coefficients are used:

- < 30 minutes: 0.5

- < 24 hours: 1

- > 24 hours: +1 for each subsequent 24-hour period

Also, multiple routing changes may be part of the same configuration mistake. For this reason, events related to the same metric that share the same time span are merged into an incident. This is shown in Figure 1.

Figure 1. Routing changes, or events (in pink), may be part of the same incident (violet). In this case an operator experienced three incidents with a duration of 29 minutes, 13 hours, and 25 hours respectively. The resulting metric will be M=0.5 + 1 + 2 = 3.5
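The merging and weighting described above can be sketched in a few lines of Python. This is a minimal illustration, not the MANRS implementation: the function names `merge_events` and `incident_weight` are hypothetical, events are assumed to be `(start, end)` pairs, "sharing the same time span" is assumed to mean overlapping or touching intervals, and the behaviour at exactly 24 hours is an assumption.

```python
from datetime import datetime, timedelta

def merge_events(events):
    """Merge overlapping (start, end) events into incidents (assumed rule)."""
    incidents = []
    for start, end in sorted(events):
        if incidents and start <= incidents[-1][1]:
            # Event overlaps the current incident: extend it if needed.
            incidents[-1][1] = max(incidents[-1][1], end)
        else:
            incidents.append([start, end])
    return [(s, e) for s, e in incidents]

def incident_weight(duration):
    """Weight of one incident, following the coefficients listed above."""
    if duration < timedelta(minutes=30):
        return 0.5
    if duration < timedelta(hours=24):
        return 1.0
    # 1 for the first 24 hours, plus 1 per further 24-hour period begun
    # (assumption: an incident of exactly 24 hours already counts as 2).
    return 1.0 + duration // timedelta(hours=24)

# Reproduce Figure 1: incidents of 29 minutes, 13 hours and 25 hours.
t0 = datetime(2024, 1, 1)
day = timedelta(days=1)
events = [
    (t0, t0 + timedelta(minutes=10)),
    (t0 + timedelta(minutes=5), t0 + timedelta(minutes=29)),
    (t0 + 2 * day, t0 + 2 * day + timedelta(hours=13)),
    (t0 + 4 * day, t0 + 4 * day + timedelta(hours=20)),
    (t0 + 4 * day + timedelta(hours=18), t0 + 4 * day + timedelta(hours=25)),
]
incidents = merge_events(events)
metric = sum(incident_weight(end - start) for start, end in incidents)
print(len(incidents), metric)
```

With these five events the sketch yields three incidents and the same total as in Figure 1, 0.5 + 1 + 2 = 3.5.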

Based on this approach, for each of the MANRS actions we can devise a composite MR-index and define thresholds for acceptable, tolerable and unacceptable values, informing members of their security posture with respect to MANRS.

A summary table of the metrics is provided below. A lower value indicates a higher grade of MANRS readiness.

Metrics

| Action | Metric | Description | Data source(s) |
|---|---|---|---|
| Filtering | M1 | Route leak by the AS. Calculates incidents where the AS was the culprit of BGP leakage events. In the example in Figure 1, if all pink events are route leaks by the AS, M1=3.5. This metric acts as a warning and does not contribute to the MANRS Readiness score. | bgpstream |
| | M2 | Route misorigination by the AS. Calculates incidents where the AS was the culprit of BGP misorigination (hijacking) events. | GRIP/ROAST/RIPEstat |
| | M1C | Route leak by a direct customer. Calculates incidents where the AS was an accomplice (the leaking AS was present in the AS-PATH) to BGP leakage events. Currently only incidents related to adjacent networks are taken into account. This metric acts as a warning and does not contribute to the MANRS Readiness score. | bgpstream |
| | M2C | Route misorigination by a direct customer. Calculates incidents where the AS was an accomplice (the misoriginating AS was present in the AS-PATH) to BGP hijack events. Currently only incidents related to adjacent networks are considered. | GRIP/ROAST/RIPEstat |
| | M3 | Bogon prefixes* by the AS. Calculates incidents where the AS originated bogon address space. Note that the duration of each incident is counted per day, as the data in the CIDR report is available only on a daily basis. As with leaks and hijacks, all prefixes originated by the AS on a given day are counted as one incident. | CIDR report/NRO/Routinator/RFCs |
| | M3C | Bogon prefixes* propagated by the AS. Calculates incidents where the AS propagated bogon address space announcements received from its peers. | CIDR report/NRO/Routinator/RFCs |
| | M3CAdmin | Administrative bogon prefixes** propagated by the AS. Calculates incidents where the AS propagated bogon address space announcements received from its customers. This metric acts as a warning and does not contribute to the MANRS Readiness score. | CIDR report/NRO |
| | M4 | Bogon ASNs* by the AS. Calculates incidents where the AS announced bogon ASNs as an adjacency. Note that the duration of each incident is counted per day, as the data in the CIDR report is available only on a daily basis. | CIDR report/NRO/Routinator/RFCs |
| | M4C | Bogon ASNs* propagated by the AS. Calculates incidents where the AS propagated bogon ASN announcements it received from its peers. Note that the duration of each incident is counted per day, as the data in the CIDR report is available only on a daily basis. | CIDR report/NRO/Routinator/RFCs |
| | M4Admin | Administrative bogon prefixes** originated or propagated by the AS. This metric acts as a warning and does not contribute to the MANRS Readiness score. | CIDR report/NRO |
| | M4CAdmin | Administrative bogon ASNs** propagated by the AS. Calculates incidents where the AS propagated administrative bogon ASN announcements it received from its customers. This metric acts as a warning and does not contribute to the MANRS Readiness score. | CIDR report/NRO |
| | M9 | Route Origin Validation. Average degree of protection of network users against incorrect announcements using RPKI route origin validation (ROV). | APNIC I-Rov Filtering Rate |
| Anti-spoofing | M5 | IP Spoofing by the AS. Calculated as follows: M5 = 0 if only positive tests are recorded; M5 = 0.5 if no tests are found; otherwise M5 = number of negative tests in separate network segments. A negative test indicates that spoofed traffic was not blocked. | CAIDA Spoofer |
| Coordination | M8 | Contact registration. Checks if the ASN has registered contact information. For the whois, based on the authority source, we check if any of the following are present: RIPE: [‘admin-c’, ‘tech-c’]; APNIC: [‘admin-c’, ‘tech-c’]; AFRINIC: [‘admin-c’, ‘tech-c’]; ARIN: [‘OrgTechRef’, ‘OrgNocRef’]; LACNIC: [‘person’, ‘email’, ‘phone’]; PeeringDB: [1 Maintenance, 3 Technical, 4 NOC]. Abuse contact information is not considered for this metric. M8 = 0: contact information is present. M8 = 1: no contact information. | RIPEstat/PeeringDB |
| Quality of Routing Information | M7IRR | Not registered routes. Calculates the percentage of announced routes originated by the AS that are not registered in an IRR as route objects. More specific routes that are advertised and covered by a less specific route object are also considered registered. | RIPEstat/PeeringDB |
| | M7RPKI | Not registered ROAs. Calculates the percentage of the announced routes originated by the AS that are not covered by any ROA in RPKI. | ROAST |
| | M7RPKIN | Invalid routes. Calculates the percentage of the announced routes originated by the AS that are invalidated by a corresponding ROA. | ROAST |
| | M7Conflict | IRR and RPKI conflicts. Indicates any routes with the ASN as an origin registered in IRR which aren’t announced by that ASN in BGP and do not have a corresponding ROA, or are invalidated by a ROA. Issued as a warning; it is not counted as an incident and does not affect the MANRS readiness score. | RIPEstat/ROAST |

*Bogon prefixes:

- IPv4: https://www.iana.org/assignments/iana-ipv4-special-registry/iana-ipv4-special-registry.xhtml

- IPv6: https://www.iana.org/assignments/iana-ipv6-special-registry/iana-ipv6-special-registry.xhtml

- All unallocated space – Includes all space unallocated to the RIRs and space marked as “available” by an RIR

**Administrative bogon prefixes:

- Address space managed by APNIC, ARIN or the RIPE NCC and marked as “Reserved”, with this status for more than 180 days.

*Bogon ASNs:

- https://www.iana.org/assignments/iana-as-numbers-special-registry/iana-as-numbers-special-registry.xhtml

**Administrative bogon ASNs:

- ASNs managed by AFRINIC, APNIC, ARIN, LACNIC or the RIPE NCC and marked as “Reserved”, with this status for more than 180 days.
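As an illustration of the M7IRR row in the metrics table, the sketch below computes the share of announced prefixes that are not covered by a route object, treating a more specific announcement as registered when a less specific route object covers it. The function name `m7irr` and its inputs are hypothetical; it assumes both lists have already been filtered to the AS in question as origin, and that an AS with no announcements scores 0.

```python
from ipaddress import ip_network

def m7irr(announced, route_objects):
    """Fraction of announced prefixes not covered by any route object."""
    announced = [ip_network(p) for p in announced]
    registered = [ip_network(p) for p in route_objects]
    if not announced:
        return 0.0  # assumption: nothing announced means nothing unregistered

    def covered(prefix):
        # Equal or more specific than a registered prefix of the same family.
        return any(
            prefix.version == r.version and prefix.subnet_of(r)
            for r in registered
        )

    missing = sum(1 for p in announced if not covered(p))
    return missing / len(announced)

# Hypothetical data using documentation prefixes.
announced = ["192.0.2.0/24", "198.51.100.0/25", "203.0.113.0/24", "2001:db8::/32"]
route_objects = ["192.0.2.0/24", "198.51.100.0/24"]
value = m7irr(announced, route_objects)
print(value)
```

Here two of the four announcements are covered (one exactly, one as a more specific of `198.51.100.0/24`), so half of the announced routes count as not registered.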

Metric Normalization and MANRS readiness scores (MRS)

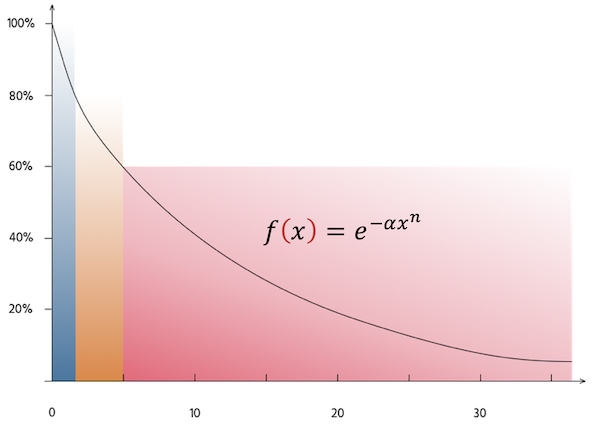

Metrics M1, M1C, M2, M2C, M3, M3C, M4, M4C and M5 have no upper limit (for example, there may be arbitrarily many incidents), so these values must be normalized. We use the following function to normalize these metrics and calculate the MANRS readiness score (MRS) of a metric $M$: M_SCORE = $MRS(M) = e^{-\alpha M^{n}}$.

The function depends on two parameters, $\alpha$ and $n$, both set by default to 0.5. We offer a predefined function, which can be called with zero to two interpolation points. This function calculates the parameters $\alpha$ and $n$ according to the following logic, where $f$ denotes the function $MRS$:

- If no interpolation points are given, the default values are used.

- If one interpolation point $(x_1, y_1)$ is given, $\alpha$ is calculated such that $f(x_1) = y_1$. Restrictions: $x_1 > 0$, $0 < y_1 < 1$.

- If two interpolation points $(x_1, y_1)$, $(x_2, y_2)$ are given, $\alpha$ and $n$ are calculated, if possible, such that $f(x_1) = y_1$ and $f(x_2) = y_2$. The same restrictions apply as above, and additionally $x_1 \neq x_2$, $y_1 \neq y_2$.

$$MRS(M) = e^{-\alpha M^{n}}$$

Figure 2. Normalizing an arbitrary value of a metric into the range 0 to 1. Blue, amber and red bars depict the level of MANRS Readiness (Ready, Aspiring and Lagging).
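The parameter fitting described above can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the predefined function itself: the names `fit_parameters` and `mrs` are hypothetical, and with a single interpolation point the exponent is assumed to stay at its default of 0.5. For two points, taking logarithms of both conditions gives n = ln(ln y1 / ln y2) / ln(x1 / x2), after which the first point alone determines the scale parameter.

```python
import math

DEFAULT_ALPHA = 0.5
DEFAULT_N = 0.5

def fit_parameters(*points):
    """Return (alpha, n) for zero, one or two interpolation points (x, y)."""
    if len(points) > 2:
        raise ValueError("at most two interpolation points are supported")
    for x, y in points:
        if not (x > 0 and 0 < y < 1):
            raise ValueError("require x > 0 and 0 < y < 1")
    if not points:
        return DEFAULT_ALPHA, DEFAULT_N
    x1, y1 = points[0]
    n = DEFAULT_N  # assumption: n keeps its default when one point is given
    if len(points) == 2:
        x2, y2 = points[1]
        if x1 == x2 or y1 == y2:
            raise ValueError("require x1 != x2 and y1 != y2")
        n = math.log(math.log(y1) / math.log(y2)) / math.log(x1 / x2)
        if n <= 0:
            raise ValueError("no decreasing curve passes through both points")
    alpha = -math.log(y1) / x1 ** n
    return alpha, n

def mrs(m, alpha=DEFAULT_ALPHA, n=DEFAULT_N):
    """Normalize a metric value m >= 0 into the range (0, 1]."""
    return math.exp(-alpha * m ** n)

alpha, n = fit_parameters((1.5, 0.8), (5, 0.6))
print(round(alpha, 4), round(n, 4))
print(mrs(0, alpha, n), mrs(1.5, alpha, n), mrs(5, alpha, n))
```

A metric value of 0 always maps to a score of 1, and the fitted curve passes through both interpolation points up to floating-point error.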

For metrics M7IRR, M7RPKI, M7RPKIN and M8 the score is calculated as 1-M. For example, for M7IRR=0.9 (90% of the prefixes are not registered), the M7IRR_SCORE=1-0.9=0.1 (10% of all prefixes are registered).

Current configuration

The current configuration uses two functions: one that calculates the complement of a given percentage value, and the proposed function with interpolation. The interpolation points were chosen as described in the following paragraphs. For normalization with the proposed function, the boundary for “normalized ready” was set to 80% (0.8) and the boundary for “normalized aspiring” to 60% (0.6).

Filtering

For filtering, the MANRS readiness score is defined as the average of the corresponding scores for metrics M1, M1C, M2, M2C, M3, M3C, M4, M4C.

MRS_Filtering=(M1_SCORE+M1C_SCORE+M2_SCORE+M2C_SCORE+M3_SCORE+M3C_SCORE+M4_SCORE+M4C_SCORE)/8

The absolute values define the readiness as follows:

- ≤1.5: Ready

- 1.5−5: Aspiring

- ≥5: Lagging

The interpolation values are chosen in the way described above, that is, the two interpolation points were chosen to be [1.5, 0.8] and [5, 0.6].
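A minimal sketch of the filtering score under these settings is shown below. The helper names `filtering_score` and `readiness` and the example metric values are hypothetical; the parameters are derived from the two interpolation points [1.5, 0.8] and [5, 0.6], and the readiness levels use the normalized boundaries of 0.8 and 0.6.

```python
import math

# Parameters chosen so that the curve passes through [1.5, 0.8] and [5, 0.6].
N = math.log(math.log(0.8) / math.log(0.6)) / math.log(1.5 / 5)
ALPHA = -math.log(0.8) / 1.5 ** N

FILTERING_METRICS = ("M1", "M1C", "M2", "M2C", "M3", "M3C", "M4", "M4C")

def filtering_score(metrics):
    """Average of the normalized scores of the eight filtering metrics."""
    scores = [math.exp(-ALPHA * metrics[name] ** N) for name in FILTERING_METRICS]
    return sum(scores) / len(scores)

def readiness(score):
    """Map a normalized score to a readiness level."""
    if score >= 0.8:
        return "Ready"
    if score >= 0.6:
        return "Aspiring"
    return "Lagging"

# Hypothetical network: clean except for misorigination incidents with M2 = 3.5.
example = dict.fromkeys(FILTERING_METRICS, 0.0)
example["M2"] = 3.5
score = filtering_score(example)
print(round(score, 3), readiness(score))
```

Because the score is an average, a single metric in the Aspiring range can still leave the overall filtering score in the Ready range when the other seven metrics are zero.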

Anti-spoofing

MRS_Anti-Spoofing=M5_SCORE

The idea is the same as for filtering; only the boundaries differ:

- 0: Ready

- 0.5: Aspiring

- 1: Lagging

As the proposed function already passes through the point [0, 1] by construction, only one interpolation point needs to be defined; we chose [0.5, 0.6].
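The anti-spoofing metric and its score can be sketched as below. The names `m5` and `m5_score` and the input format (a mapping from network segment to whether spoofed traffic was blocked) are hypothetical, and the exponent is assumed to stay at its default of 0.5 because only one interpolation point is given.

```python
import math

N = 0.5  # assumption: default exponent with a single interpolation point
ALPHA = -math.log(0.6) / 0.5 ** N  # curve passes through [0.5, 0.6]

def m5(tests):
    """tests maps a network segment to True if spoofed traffic was blocked."""
    if not tests:
        return 0.5  # no tests found
    # Count negative tests (spoofed traffic not blocked); 0 if all are positive.
    return float(sum(1 for blocked in tests.values() if not blocked))

def m5_score(value):
    """Normalize M5 into the range (0, 1]."""
    return math.exp(-ALPHA * value ** N)

# Hypothetical results for two segments, one of which lets spoofed traffic out.
results = {"segment-a": True, "segment-b": False}
print(m5(results), round(m5_score(m5(results)), 3))
print(m5({}), round(m5_score(m5({})), 3))
```

Under these assumptions a network with no recorded tests scores exactly 60%, while a single negative test gives a score of about 49%, which falls in the Lagging range.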

Coordination

MRS_Coordination=M8_SCORE

Since the coordination metric is delivered as a 0/1 value, it is reasonable to treat it as a percentage. Here 0 means that contact information is present and 1 means that no contact information is present. For Coordination, the absolute values define the readiness as follows:

- 0: Ready

- 1: Lagging

We mapped the boundaries for the normalized values accordingly:

- 1: Ready

- 0: Lagging

Routing Information (IRR, RPKI)

Same mapping/concept as for coordination, as the values delivered are already percentages.

MRS_Global_Validation_IRR=M7IRR_SCORE

Since for RPKI we need to take into account not only properly registered prefixes, but also the ones that are invalidated by a ROA (suggesting that the ROA is incorrect), the calculation is slightly different:

MRS_Global_Validation_RPKI=max (0;M7RPKI_SCORE-10*M7RPKIN_SCORE)

For routing information, the absolute values define the readiness as follows:

- ≤0.1: Ready

- 0.1−0.5: Aspiring

- >0.5: Lagging

We mapped the boundaries for the normalized values accordingly:

- ≥0.9: Ready

- 0.9−0.5: Aspiring

- <0.5: Lagging

The overview of absolute and normalized values and the thresholds is presented in the table below:

| Metric | Absolute: Ready | Absolute: Aspiring | Absolute: Lagging | Normalized: Ready | Normalized: Aspiring | Normalized: Lagging |
|---|---|---|---|---|---|---|
| Filtering | <1.5 | 1.5-5 | >5 | ≥80% | 60-80% | <60% |
| Anti-spoofing | 0 | 0.5 | ≥1 | >60% | 60% | <60% |
| Coordination | 0 | – | 1 | 100% | – | 0% |
| Routing Information IRR | <0.1 | 0.1-0.5 | >0.5 | ≥90% | 50-90% | <50% |
| Routing Information RPKI | <0.1 | 0.1-0.5 | >0.5 | ≥90% | 50-90% | <50% |