BGP super-blunder: How Verizon today sparked a ‘cascading catastrophic failure’ that knackered Cloudflare, Amazon, etc

This article was originally published at The Register website. The views expressed in this article are those of the author alone and not the Internet Society/MANRS.

Updated: Verizon sent a big chunk of the internet down a black hole this morning – and caused outages at Cloudflare, Facebook, Amazon, and others – after it wrongly accepted a network misconfiguration from a small ISP in Pennsylvania, USA.

For nearly three hours, web traffic that was supposed to go to some of the biggest names online was instead accidentally rerouted through a steel giant based in Pittsburgh.

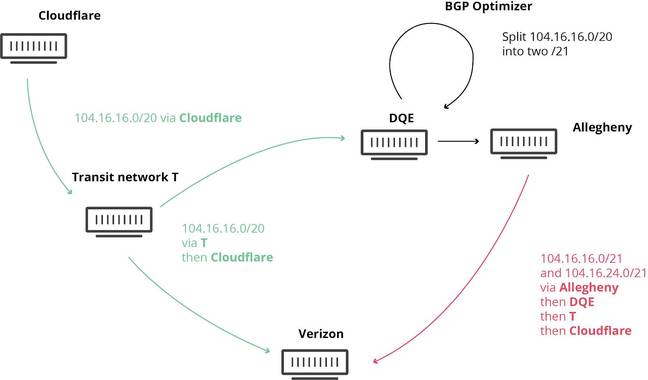

It all started when regional US ISP DQE Communications wrongly announced new internet routes for more than 20,000 IP address prefixes – roughly two per cent of the internet. This announcement told the sprawling internet's backbone equipment to thread netizens' traffic through DQE and one of its clients, steel giant Allegheny Technologies. That redirection was then, mindbogglingly, accepted and passed on to the world by Verizon, a trusted major authority on the internet's highways and byways. This happened because Allegheny is also a customer of Verizon: it too announced the route changes to Verizon, which disseminated them further.
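Allegheny's part in this can be framed with the commonly taught "valley-free" (Gao-Rexford) export rule: routes a network learns from one of its transit providers should only be passed on to its own customers, never to another provider. Below is a minimal Python sketch of that rule. It assumes each BGP neighbour is simply labelled customer, peer or provider. The names `Rel`, `may_export` and `find_leaks`, and the example prefixes, are hypothetical; real routers express this through route policies and communities, not a function like this.

```python
from enum import Enum


class Rel(Enum):
    """Business relationship of a BGP neighbour, seen from our own network."""
    CUSTOMER = "customer"  # pays us for transit
    PEER = "peer"          # exchanges traffic with us settlement-free
    PROVIDER = "provider"  # we pay them for transit


def may_export(learned_from: Rel, send_to: Rel) -> bool:
    """Valley-free export rule: customer routes may go to anyone, but routes
    learned from a peer or provider may only be passed on to customers."""
    if learned_from is Rel.CUSTOMER:
        return True
    return send_to is Rel.CUSTOMER


def find_leaks(exports):
    """Return the (prefix, learned_from, send_to) exports that break the rule."""
    return [e for e in exports if not may_export(e[1], e[2])]


# Hypothetical exports from one network. The first mirrors a multihomed
# customer re-announcing routes from provider A to provider B.
exports = [
    ("192.0.2.0/24", Rel.PROVIDER, Rel.PROVIDER),     # leak
    ("198.51.100.0/24", Rel.CUSTOMER, Rel.PROVIDER),  # allowed
]
leaks = find_leaks(exports)
```

In this model, a customer with two providers that hands one provider's routes to the other is exactly the provider-to-provider case the rule forbids.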

And so, systems around the planet were automatically updated, and connections destined for Facebook, Cloudflare, and others ended up going through DQE and Allegheny, which buckled under the strain and caused traffic to disappear into a black hole.
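One common reason routers everywhere prefer a bogus route is longest-prefix matching: when several prefixes cover a destination, the most specific one wins, however odd its path. The sketch below uses Python's standard `ipaddress` module and assumes the leaked announcements were more specific slices of legitimate prefixes. The routing table and the "path" labels are hypothetical.

```python
import ipaddress


def lookup(table, dst):
    """Longest-prefix match: of all prefixes containing dst, use the most specific."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, hop) for net, hop in table if addr in net]
    if not matches:
        return None  # no route to this destination
    best_net, best_hop = max(matches, key=lambda m: m[0].prefixlen)
    return best_hop


# Hypothetical table: a legitimate /24 plus a leaked, more specific /25.
table = [
    (ipaddress.ip_network("203.0.113.0/24"), "legitimate path"),
    (ipaddress.ip_network("203.0.113.0/25"), "leaked path"),
]
```

With this table, traffic for `203.0.113.10` follows the leaked /25. Addresses outside it, such as `203.0.113.200`, still take the legitimate /24.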

Internet engineers blamed a piece of automated networking software – a BGP optimizer built by Noction – that was used by DQE to improve its connectivity. And even though these kinds of misconfigurations happen every day, there is significant frustration and even disbelief that a US telco as large as Verizon would pass on this amount of incorrect routing information.

Routes across the internet change pretty much constantly, rapidly, and automatically, 24 hours a day, as the network reshapes itself while links open and close. A lot breaks and is repaired without any human intervention. However, a sudden, large, erroneous change like today's should have been caught by filters within Verizon, and never accepted and disseminated.
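One widely used safeguard against a sudden flood of routes is a per-neighbour maximum-prefix limit. The router counts how many prefixes a neighbour has announced and reacts once a configured ceiling is exceeded. Real routers can be set to merely warn or to tear the session down. The minimal Python model below assumes the tear-down behaviour. The `Session` class, the limit of 50, and the generated prefixes are all hypothetical.

```python
class Session:
    """Hypothetical BGP session model with a per-neighbour maximum-prefix limit."""

    def __init__(self, neighbour: str, max_prefixes: int):
        self.neighbour = neighbour
        self.max_prefixes = max_prefixes
        self.accepted = set()  # prefixes currently accepted from this neighbour
        self.shut_down = False

    def receive(self, prefixes) -> bool:
        """Accept an update's prefixes, or tear the session down if the running
        total would exceed the configured limit."""
        if self.shut_down:
            return False
        if len(self.accepted | set(prefixes)) > self.max_prefixes:
            self.shut_down = True
            self.accepted.clear()  # drop every route learned on this session
            return False
        self.accepted.update(prefixes)
        return True


# A small customer normally announces a handful of prefixes; cap it at 50.
session = Session("small-customer", max_prefixes=50)
session.receive(["192.0.2.0/24"])
# A sudden flood of 20,000 routes trips the limit instead of being passed on.
flood = [f"10.{i // 256}.{i % 256}.0/24" for i in range(20_000)]
session.receive(flood)
```

The design choice here is to fail closed: losing one small customer's session is far cheaper than forwarding 20,000 bad routes to the rest of the world.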

“While it is easy to point at the alleged BGP optimizer as the root cause, I do think we now have observed a cascading catastrophic failure both in process and technologies,” complained Job Snijders, an internet architect for NTT Communications, in a memo today on a network operators’ mailing list.

That concern was reiterated in a conversation with the chief technology officer of one of the organizations most severely impacted by today’s BGP screw-up: Cloudflare. CTO John Graham-Cumming told The Register a few hours ago that “at its worst, about 10 per cent of our traffic was being directed over to Verizon.”

“A customer of Verizon in the US started announcing essentially that a very large amount of the internet belonged to them,” Graham-Cumming told El Reg‘s Richard Speed, adding: “For reasons that are a bit hard to understand, Verizon decided to pass that on to the rest of the world.”

He scolded Verizon for not filtering the change out: “It happens a lot,” Graham-Cumming said of BGP leaks and misconfigurations, “but normally [a large ISP like Verizon] would filter it out if some small provider said they own the internet.”
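The filtering Graham-Cumming describes is usually a per-customer prefix filter. A transit provider accepts a customer's announcement only if it falls inside address space that customer is known to hold, for example according to records in an Internet Routing Registry. Below is a minimal Python sketch that assumes the customer's registered prefixes are already known. `build_filter`, `accept` and the example prefixes are hypothetical. Production prefix lists also bound how specific an accepted route may be, which this sketch omits.

```python
import ipaddress


def build_filter(registered):
    """Turn the prefixes a customer is known to hold into network objects."""
    return [ipaddress.ip_network(p) for p in registered]


def accept(route, allowed):
    """Accept a customer's announcement only if it falls inside a registered
    prefix; anything else (such as 'the whole internet') is dropped."""
    net = ipaddress.ip_network(route)
    # Compare only same-family networks; subnet_of rejects IPv4/IPv6 mixes.
    return any(net.version == a.version and net.subnet_of(a) for a in allowed)


# Hypothetical small customer that holds a single /24.
allowed = build_filter(["198.51.100.0/24"])
```

Here `accept("198.51.100.128/25", allowed)` is allowed, while a claim to someone else's space, such as `203.0.113.0/24`, is rejected at the edge.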

Time to fix this

Although internet engineers have been dealing with these glitches and gremlins for years thanks to the global network’s fundamental trust approach – where you simply trust people not to provide the wrong information – in recent years BGP leaks have gone from irritation to a critical flaw that techies feel they need to fix.

Criminals and government-level spies have realized the potential in such leaks for grabbing shed loads of internet traffic: troves of data that can then be used for a variety of questionable purposes, including surveillance, disruption, and financial theft.

And there are technical fixes – as we explained the last time there was a big routing problem, which was, um, earlier this month.

One key industry group, Mutually Agreed Norms for Routing Security (MANRS), has four main recommendations for fixing the problem: two technical and two cultural.

The two technical approaches are filtering and anti-spoofing. Filtering checks the routes announced by other network operators to see if they are legitimate and removes any that aren't, while anti-spoofing blocks traffic carrying forged source IP addresses. The cultural fixes are coordination and global validation, which encourage operators to keep their contact details up to date, talk more to one another, and publish their routing information so that others can verify announcements and flag and remove any suspicious-looking BGP changes.
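One concrete form of global validation is RPKI route origin validation, described in RFC 6811. Networks publish signed statements of which AS may originate their prefixes, and up to what prefix length. Everyone else can then classify each BGP route as valid, invalid, or not found. The minimal Python sketch below follows that classification. It assumes the validated ROA payloads (VRPs) have already been fetched and verified. The `VRP` type, the `rov_state` function and the example data are hypothetical; the AS numbers come from the range reserved for documentation.

```python
import ipaddress
from typing import NamedTuple


class VRP(NamedTuple):
    """Validated ROA payload: prefix, maximum length and authorised origin AS."""
    prefix: str
    max_length: int
    asn: int


def rov_state(route, origin_asn, vrps):
    """Classify a route as 'valid', 'invalid' or 'not-found' per RFC 6811."""
    net = ipaddress.ip_network(route)
    covered = False
    for v in vrps:
        vnet = ipaddress.ip_network(v.prefix)
        if vnet.version != net.version or not net.subnet_of(vnet):
            continue
        covered = True  # at least one VRP covers this route
        if net.prefixlen <= v.max_length and origin_asn == v.asn:
            return "valid"
    return "invalid" if covered else "not-found"


# Hypothetical published data: AS64500 may originate 203.0.113.0/24, nothing longer.
vrps = [VRP("203.0.113.0/24", 24, 64500)]
```

In this sketch a more specific `203.0.113.0/25` is classed as invalid even when the origin AS is correct, because it exceeds the published maximum length.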

Verizon is not a member of MANRS.

“The question for Verizon is: why did you not filter out the routes that were coming from this small network?” asked Cloudflare’s Graham-Cumming.

And as it happens, we have asked Verizon exactly that question, as well as whether it will join the MANRS group. We have also asked DQE Communications, the original source of the problem, what happened and why. We'll update this story if and when they get back to us. ®

Updated to add

Verizon sent us the following baffling response to today’s BGP cockup: “There was an intermittent disruption in internet service for some [Verizon] FiOS customers earlier this morning. Our engineers resolved the issue around 9am ET.”

Er, we think there was “an intermittent disruption” for more than just “FiOS customers” today.

Meanwhile, a spokesperson for DQE has been in touch to say:

Earlier this morning, DQE was alerted that a third-party ISP was inadvertently propagating routes from one of our shared customers downstream, impacting Cloudflare’s services. We immediately examined the issue and adjusted our routing policy, ameliorating Cloudflare’s situation and allowing them to resume normal operations. DQE continuously monitors its network traffic and responds quickly to any incidents to ensure maximum uptime for its customers.
